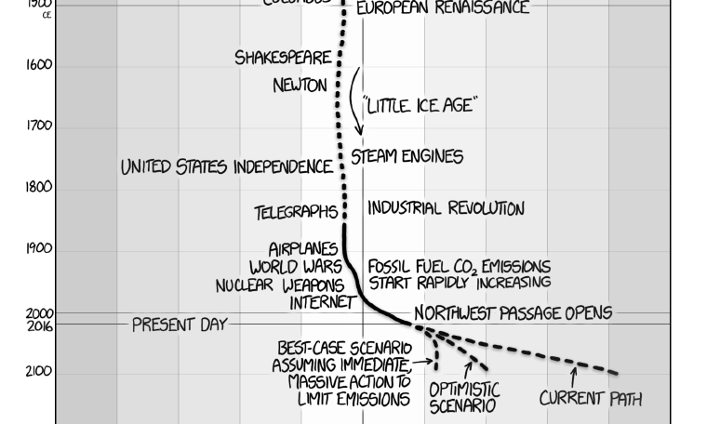

Global Warming Alarmists Promote XKCD Time Series Cartoon, Ignore Its Mistakes

Not everything is as it seems

The popular web cartoon xkcd has provided a wonderful opportunity to plug my must-read (and too expensive) book Uncertainty: The Soul of Modeling, Probability & Statistics. Buy a copy and follow along.

In this award-eligible book, which has the potential to be read by millions and which has the power to change more lives than even the Atkins Diet, I detail (in the ultimate chapter) the common errors made in time series analysis. Time series are the kind of data you see in, for example, temperature or stock price plots through time.

The xkcd post (thanks, by the way, to all the many readers who emailed about it) entitled “A Timeline Of Earth’s Average Temperature” makes a slew of fun errors, but — and I want to emphasize this — it isn’t xkcd’s fault. The picture he shows is the result of the way temperature and proxy data are handled by most of the climatological community. Mr. Munroe, the xkcd cartoonist, is repeating what he has learned from experts, and repeating things from experts when you yourself don’t know the subject is a rational thing to do.

The plot purportedly shows the average global temperature, presumably measured right above the surface, beginning in 20,000 BC and ending in the future at 2100 AD. It also shows the temperature rising anomalously in recent decades and soaring into the aether in the future. Now I’m going to show exactly why xkcd’s plot fails, but to do so is hard work, so first a sort of executive summary of its oddities.

The Gist

(1) The flashy temperature rises (the dashed lines) at the end are conjectures based on models that have repeatedly been proven wrong (indeed, they’ve never been proven right) by predicting temperatures much warmer than those actually observed. There is ample reason to distrust these predictions.

(2) Look closely at the period from 9000 BC until roughly 1000 AD, an era of some 10,000 years which had, if xkcd’s graph is true, temperatures much warmer than we had until the Internet. And this was long before the first barrel of oil was ever turned into gasoline and burned in life-saving internal combustion engines.

(3) There was no reason to start the graph at 20,000 BC. If xkcd had taken the timeline back further, he would have had to draw temperatures several degrees warmer than today’s, temperatures which outstrip the threatened warming promised by faulty climate models. And don’t forget that warmer temperatures are always associated with lush and bountiful periods in Earth’s history. It’s ice and cold that kill.

(4) The picture xkcd presents is lacking any indication of uncertainty, which is the major flaw. We should not be looking at lines, which imply perfect certainty, but blurry swaths that indicate uncertainty. Too many people are too certain of too many things, meaning the debate is far from “settled.”

Unknown Unknowns

The temperature at 20,000 BC was, Munroe claims — no doubt relying on expert sources — about 4.3 C colder than the ad hoc average of temperatures from 1961-1990.

Was it actually 4.3 C cooler? How do we know? Forget the departure from the ad hoc average, which is a distraction. How do we know what the temperature was all those years ago? After all, there were no thermometers.

The answer is — get a pen and write this down, it’s crucial — we don’t know.

I’ll repeat that, because it’s a crucial point. We do not know what the temperature was. Yet here is xkcd, and climatologists, saying they do know. What gives?

Statistical modeling is what gives. This is complicated, so follow me closely. That it is complicated is why so many mistakes are made. It is tough to keep everything in mind at once, especially when one is in a hurry to get to an answer (I do not say the answer).

Temperature in history can’t be measured, but things associated with temperature can. For instance, coral grows at different rates at different temperatures. Certain chemical species dissolve in water at different rates at different temperatures. These other measures are called proxies. If we know the proxy, we can make a guess of the temperature.

Unlike temperature, proxies can often be measured historically, although never without error. That’s point (1): proxies are measured with error. After all, it’s hard to know exactly how much of a certain isotope of oxygen was present everywhere on Earth 22,000 years ago, yes? Don’t forget we’re talking about global average temperature. And then it’s not always clear that the historical dates are quite accurate either. Can you tell the difference between 22,000 and 21,999 years ago in an ancient chunk of coral? These are the two classes of measurement error. They have different effects, as we’ll see.

A statistical model between the proxy and the temperature, both measured in the present day, is then built. Statistical models have internal guts, things called parameters. One or more of these parameters will relate how the change in the proxy is associated with the change in the uncertainty of the temperature. Not change of the temperature: change of the uncertainty in the temperature. Usually, and unfortunately, what happens is that these parameters are mistaken for the temperature (and not its uncertainty). That’s point (2): these models report on parameters, about which we can be as certain as we like, when what they should report, but don’t, are predictions.

That is, the proxy measured at 20,000 BC is fed into the model and the parameter effect is reported. The uncertainty in this parameter effect, sometimes called a confidence interval, might also be reported. But these are always beside the point. Who cares about some dumb model? What’s needed is a prediction of what the temperature might have been given the assumed/measured value of the proxy. Not only the prediction, but also some indication of the uncertainty in the prediction should be given. (Even these predictions will be too sure because we can’t check the models’ validity in times historical; the reader here understands I am simplifying for a general audience.)
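The gap between the two kinds of interval can be sketched in a few lines of Python. This is only an illustration under loud assumptions: a straight-line calibration fit by least squares, a normal approximation (`z = 1.96`), and entirely synthetic proxy and temperature numbers. The function name `calibration_intervals` is made up for this example, and nothing here is the procedure any particular reconstruction uses.

```python
import numpy as np

def calibration_intervals(proxy, temp, proxy_new, z=1.96):
    """Fit temp = a + b * proxy by least squares, then return the guess at
    proxy_new with the half-widths of two different intervals.
    Assumes a straight-line model and a normal approximation."""
    proxy = np.asarray(proxy, dtype=float)
    temp = np.asarray(temp, dtype=float)
    n = proxy.size
    x_bar = proxy.mean()
    sxx = np.sum((proxy - x_bar) ** 2)
    b = np.sum((proxy - x_bar) * (temp - temp.mean())) / sxx
    a = temp.mean() - b * x_bar
    resid = temp - (a + b * proxy)
    s2 = np.sum(resid ** 2) / (n - 2)  # residual variance
    leverage = 1.0 / n + (proxy_new - x_bar) ** 2 / sxx
    guess = a + b * proxy_new
    # Parametric: uncertainty in the fitted line (the model guts).
    parametric_half = z * np.sqrt(s2 * leverage)
    # Predictive: uncertainty in the temperature itself.
    predictive_half = z * np.sqrt(s2 * (1.0 + leverage))
    return guess, parametric_half, predictive_half

# Synthetic "present day" calibration data (invented for illustration).
rng = np.random.default_rng(0)
proxy = rng.uniform(0.0, 10.0, size=200)
temp = 2.0 + 0.5 * proxy + rng.normal(0.0, 1.0, size=200)

# A proxy value outside the calibration range, as an ancient one might be.
guess, parametric_half, predictive_half = calibration_intervals(
    proxy, temp, proxy_new=-5.0
)
print(f"guess {guess:.2f}")
print(f"parametric +/- {parametric_half:.2f}")
print(f"predictive +/- {predictive_half:.2f}")
```

In this setup the predictive half-width is always the larger of the two, because it carries the residual scatter of temperature around the line in addition to the uncertainty in the line itself. Piling up more calibration data shrinks the parametric interval toward zero, but the predictive one never drops below the scatter.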

The xkcd cartoon did not show any uncertainty. So Munroe, like the climatologists, makes it appear that we know to a great degree just what the global average temperature was a long time ago. Which we don’t; not exactly.

The errors made thus far fool modelers into thinking they know much more than they do. The parametric confidence intervals tell us of the model guts and not about the temperature, and so using only these intervals guarantees over-certainty, and a lot of it. That we don’t incorporate the two kinds of proxy measurement error also guarantees even more over-certainty. I say guarantees: this is not a supposition, but a logical truth.

The combined effect of forgetting about measurement error is twofold: the uncertainty bounds are again too narrow (because of the first type of measurement error), and the graphs are way, way too smooth (because of the second).
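The smoothing caused by the second kind of error, uncertain dates, can be sketched with a small simulation. Everything in it is assumed for illustration: the "true" series is a slow wave plus year-to-year wiggles, each proxy record reads that series at slightly wrong dates, and the records are then averaged as if the dates were exact. The helper names `stack_with_dating_error` and `roughness` are hypothetical.

```python
import numpy as np

def stack_with_dating_error(true_series, n_records, date_sd, rng):
    """Average n_records readings of true_series, where every sample's
    assigned date is off by a random number of years (std dev date_sd)."""
    n = true_series.size
    years = np.arange(n)
    stacked = np.zeros(n)
    for _ in range(n_records):
        offsets = np.rint(rng.normal(0.0, date_sd, size=n)).astype(int)
        actual_year = np.clip(years + offsets, 0, n - 1)
        stacked += true_series[actual_year]
    return stacked / n_records

def roughness(series):
    """Standard deviation of year-to-year changes."""
    return float(np.std(np.diff(series)))

# Synthetic "true" temperatures: slow wave plus annual wiggles (invented).
rng = np.random.default_rng(1)
n_years = 2000
years = np.arange(n_years)
true_series = np.sin(2 * np.pi * years / 500.0) + rng.normal(0.0, 0.5, size=n_years)

exact = stack_with_dating_error(true_series, n_records=50, date_sd=0.0, rng=rng)
misdated = stack_with_dating_error(true_series, n_records=50, date_sd=20.0, rng=rng)

rough_true = roughness(true_series)
rough_exact = roughness(exact)
rough_misdated = roughness(misdated)
print(f"true series roughness:     {rough_true:.3f}")
print(f"stack with exact dates:    {rough_exact:.3f}")
print(f"stack with uncertain dates: {rough_misdated:.3f}")
```

With exact dates the stack reproduces the wiggles of the original. With dates that are off by a couple of decades, the averaging washes the wiggles out, and the stacked line looks far calmer than the series it was built from.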

Have you ever noticed how smooth and cocksure plots of historical temperature are, like xkcd’s? These errors are why. What we should actually see, instead of xkcd’s smooth, pretty line, is a vast wide blur, which is blurrier the farther back in time we go, and more focused the nearer to our time.

Now look at the end of xkcd’s plot, where more errors are found. Start around Anno Domini 1900. By that time, thermometers are on the scene, meaning that new kinds of models to form global averages are being used. These also require uncertainty bounds, which aren’t shown. Anyway, xkcd, like climatologists, stitches all these disparate data sources and models together as if the series were homogeneous through time, which it isn’t.

Here’s point (3): Because we can measure temperature in known years now (and not then), and we need not rely on proxies, the recent line looks sharper and thus tends to appear to bounce around more. It still requires fuzz, some idea of uncertainty, which isn’t present, but this fuzz is much less than for times historical.

The effect is like looking at foot tracks on a beach. Close by, the steps appear to be wandering vividly this way or that, but if you peer at them into the distance they appear to straighten into a line. Yet if you were to go to the distant spot, you’d notice the path was just as jagged. Call our misperception of time series, on which xkcd relies for his joke, statistical foreshortening. This is an enormous and almost always unrecognized problem in judging uncertainty.

There is one error left. From Anno Domini 2016 to 2100 xkcd shows dashed lines which are claimed to be temperatures. They aren’t, of course; at least not directly. They are guesses from climate models. These too should have uncertainty attached, but don’t.

The type of uncertainty xkcd should have shown is again not “parametric” but predictive. We could rely on what the models themselves are telling us to get the parametric, or we could rely on the actual performance of models to get the predictive. Only the actual performance counts.

I’ll repeat that, too. Only actual model performance counts. That’s how science is supposed to work. We trust only those models that work.

Can we say anything about actual performance? Yes, we can. We can say with certainty that the models stink. We can say that models have over a period of many years predicted temperatures greater than we have actually seen. We can say that the discrepancy between the models’ predictions and reality is growing wider. This implies the uncertainty bounds on xkcd’s dashed lines should be healthy and wide. This is why xkcd’s, and the climatologists’, “current path” is not too concerning. There is no reason to place much warrant in the models’ predictions.
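One way to turn actual performance into uncertainty bounds is to look at how far past forecasts missed and attach that spread to a new forecast. The sketch below is a crude version of that idea, not anyone's official method. The function name `performance_based_interval` is hypothetical, and the numbers fed to it are invented placeholders, not real model output or real observations.

```python
import numpy as np

def performance_based_interval(past_forecasts, past_observed, new_forecast,
                               coverage=0.9):
    """Build an interval for new_forecast from the empirical distribution
    of past forecast errors (observed minus forecast)."""
    errors = (np.asarray(past_observed, dtype=float)
              - np.asarray(past_forecasts, dtype=float))
    bias = float(errors.mean())  # negative means forecasts ran too warm
    low_q, high_q = np.quantile(errors, [(1 - coverage) / 2, (1 + coverage) / 2])
    return bias, float(new_forecast + low_q), float(new_forecast + high_q)

# Placeholder numbers, invented purely to exercise the function.
past_forecasts = [0.30, 0.45, 0.60, 0.70, 0.85]
past_observed = [0.25, 0.50, 0.40, 0.65, 0.60]
new_forecast = 1.0

bias, low, high = performance_based_interval(
    past_forecasts, past_observed, new_forecast
)
print(f"mean error {bias:+.2f}, interval [{low:.2f}, {high:.2f}]")
```

The interval depends only on how the forecasts actually performed, not on what the model says about its own guts. With only a handful of past forecasts the quantiles are rough, so a real analysis would want a much longer record of forecast and outcome pairs.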

If I could draw a stick figure, I would do so here with the character saying, “Buy my book to learn more.”